Human agent reasoning using Controlled Natural Language

Military / Coalition Issue

Data captured by humans is often expressed as semi-structured or unstructured text, making it difficult to extract knowledge and integrate it into a common operational picture.

Core idea and key achievements

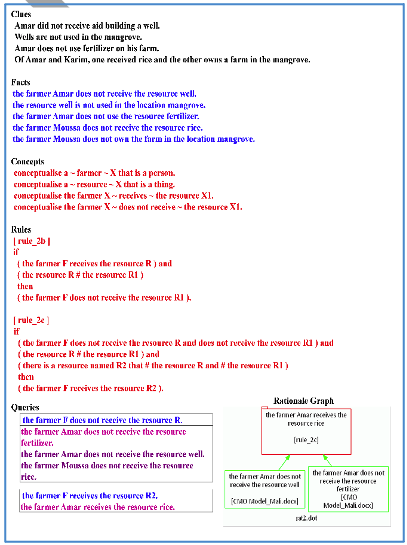

A conceptual model and initial natural language processing capability for fact extraction and human-AI agent problem-solving. The system uses Controlled English (CE), a machine-readable and human-understandable language, for fact extraction and for syntactic and semantic analysis. CE can represent concepts, facts, assumptions, and logical inference rules that express constraints on relationships. The CE system enables users to represent and evaluate the reasoning steps and rationale that lead to conclusions, with the aim of identifying inconsistent hypotheses and cognitive biases. It generates “rationale graphs” that use natural language to show the reasoning steps, facts, and premises leading to each inference. The CE system has been applied to numerous logic problems, most recently a food security problem for coalition partners to resolve.
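The reasoning loop described above can be illustrated with a minimal forward-chaining sketch. In that loop, facts and inference rules yield conclusions whose rationale can be traced. This is not the CE system's implementation or syntax. Here, facts are simplified to (subject, relation, object) triples. The rule names, the `forward_chain` and `explain` helpers, and the food-security facts are all hypothetical. Each derived fact records the rule and premises that produced it, which is the kind of information a rationale graph displays.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple

# A fact is a (subject, relation, object) triple: a hypothetical, simplified
# stand-in for a CE sentence, not actual CE syntax.
Fact = Tuple[str, str, str]
Binding = Dict[str, str]


@dataclass(frozen=True)
class Rule:
    name: str
    premises: Tuple[Fact, ...]  # terms starting with "?" are variables
    conclusion: Fact


def unify(pattern: Fact, fact: Fact, binding: Binding) -> Optional[Binding]:
    """Extend `binding` so that `pattern` matches `fact`, or return None."""
    result = dict(binding)
    for p, f in zip(pattern, fact):
        if p.startswith("?"):
            if result.setdefault(p, f) != f:
                return None  # variable already bound to a different value
        elif p != f:
            return None
    return result


def forward_chain(facts: Set[Fact], rules: List[Rule]):
    """Apply rules to a fixed point, recording the rationale for each new fact."""
    known = set(facts)
    rationale: Dict[Fact, Tuple[str, List[Fact]]] = {}
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # Each partial match pairs a variable binding with the facts used so far.
            matches: List[Tuple[Binding, List[Fact]]] = [({}, [])]
            for premise in rule.premises:
                extended = []
                for binding, support in matches:
                    for fact in known:
                        new_binding = unify(premise, fact, binding)
                        if new_binding is not None:
                            extended.append((new_binding, support + [fact]))
                matches = extended
            for binding, support in matches:
                derived = tuple(binding.get(t, t) for t in rule.conclusion)
                if derived not in known:
                    known.add(derived)
                    rationale[derived] = (rule.name, support)
                    changed = True
    return known, rationale


def explain(fact: Fact, rationale, indent: int = 0) -> List[str]:
    """Render the rationale behind `fact` as an indented text tree."""
    pad = "  " * indent
    if fact not in rationale:
        return [f"{pad}{' '.join(fact)}  [given]"]
    rule_name, support = rationale[fact]
    lines = [f"{pad}{' '.join(fact)}  [by {rule_name}]"]
    for premise in support:
        lines.extend(explain(premise, rationale, indent + 1))
    return lines


# Hypothetical food-security example.
facts = {
    ("district_A", "experiences", "drought"),
    ("district_A", "depends_on", "local_farming"),
    ("district_B", "depends_on", "local_farming"),
}
rules = [
    Rule("drought_rule", (("?d", "experiences", "drought"),),
         ("?d", "has", "reduced_harvest")),
    Rule("vulnerability_rule",
         (("?d", "has", "reduced_harvest"), ("?d", "depends_on", "local_farming")),
         ("?d", "at_risk_of", "food_insecurity")),
]
known, rationale = forward_chain(facts, rules)
for line in explain(("district_A", "at_risk_of", "food_insecurity"), rationale):
    print(line)
```

Running the sketch prints a four-line tree. The conclusion `district_A at_risk_of food_insecurity` sits at the root. Beneath it are its two premises, and the derived premise is in turn traced back to the given drought fact. `district_B` is not flagged, because no rule derives a reduced harvest for it.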

Implications for Defence

In addition to processing natural language for fact extraction from semi-structured or unstructured data, the CE system can test multiple hypotheses iteratively by generating a rationale graph for each. Unlike machine learning algorithms, whose outputs may be difficult for humans to interpret, the CE system represents information formally and in context and exposes its reasoning through rationale graphs. This makes human-AI agent reasoning transparent, helping users detect inconsistent hypotheses and cognitive biases such as confirmation bias.
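Iterative hypothesis testing can be sketched as follows. Each hypothesis adds assumed facts to shared evidence, the rules are applied to a fixed point, and the result is checked against declared constraints. This is a hypothetical propositional simplification and not the CE system's method. The proposition names, rules, and mutually exclusive pairs are invented for illustration, and `closure` and `evaluate_hypotheses` are not CE APIs.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

# Hypothetical propositional encoding: a rule is (premises, conclusion).
Rule = Tuple[FrozenSet[str], str]
Conflict = Tuple[str, str]


def closure(assumptions: Set[str], rules: List[Rule]) -> Set[str]:
    """Forward-chain the rules until no new propositions are derived."""
    derived = set(assumptions)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


def evaluate_hypotheses(
    evidence: Set[str],
    hypotheses: Dict[str, Set[str]],
    rules: List[Rule],
    exclusive: List[Conflict],
) -> Dict[str, List[Conflict]]:
    """For each hypothesis, list the mutually exclusive pairs it makes both true."""
    report = {}
    for name, assumed in hypotheses.items():
        derived = closure(evidence | assumed, rules)
        report[name] = [(a, b) for a, b in exclusive if a in derived and b in derived]
    return report


# Hypothetical evidence and two competing explanations for rising food prices.
evidence = {"food_prices_rising", "rainfall_normal"}
rules: List[Rule] = [
    (frozenset({"rainfall_normal"}), "harvest_normal"),
    (frozenset({"crop_failure"}), "harvest_poor"),
    (frozenset({"road_blocked"}), "supply_disrupted"),
]
exclusive = [("harvest_normal", "harvest_poor")]
hypotheses = {
    "crop_failure": {"crop_failure"},
    "transport_disruption": {"road_blocked"},
}
report = evaluate_hypotheses(evidence, hypotheses, rules, exclusive)
print(report)
```

Here the `crop_failure` hypothesis is flagged as inconsistent. It implies a poor harvest, while the normal-rainfall evidence implies a normal one. The `transport_disruption` hypothesis produces no conflict. A fuller version would attach the rationale behind each conflicting proposition, so a reader could see which assumption caused it.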

Readiness & alternative Defence uses

TRL 3: conceptual model and CE system with base functionality. The Collaborative Planning Model (CPM) was developed using a prior iteration of the CE system to represent and exchange the rationale for sub-plans between coalition planners. In a dynamic coalition planning experiment in which Soldiers represented elements of a distributed US and UK Brigade, anecdotal evidence suggested that CPM facilitated the identification of inconsistent hypotheses within sub-plans.

Resources and references

- Giammanco, Cheryl, and David Mott. “Human-Agent Reasoning about Civil-Military Consideration Using Controlled Natural Language.”

- Patel, Jitu, Michael C. Dorneich, David Mott, Ali Bahrami, and Cheryl Giammanco. “Making Plans Alive.” Proc. 6th Knowledge Systems for Coalition Operations (2010).

Organisations

IBM UK, ARL DEVCOM