State-Action Separable and Embedding for Reinforcement Learning

Military / Coalition Issue

Reinforcement learning (RL) has been widely applied for system control in many domains. However, state-of-the-art RL often encounters issues such as huge state-action spaces, inefficient knowledge representation of states and actions, and violation of underlying model assumptions. As a result, RL techniques are often computationally complex and require long learning time, thus limiting their applicability to national defence.

Core idea and key achievements

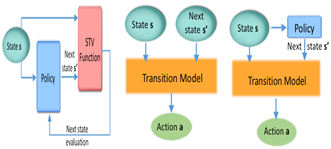

The DAIS team has developed new techniques to address these RL issues, including state-action space explosion, inefficient knowledge representation of states and actions, learning with sudden changes in operating environments, and violation of underlying model assumptions. We focus here on two new techniques. First, a new technique called state-action separable RL (sasRL) has been proposed to separate state transitions from actions taken, while considering impact of actions through simple supervised learning, thus alleviating the issue of large state-action spaces. Second, a new embedding approach has been developed that uses a model of the environment to obtain joint embeddings for states and actions, from which the optimal policy can be learned. In this way, the embedded representations obtained enable better generalization over both states and actions by capturing similarities in the embedding spaces. Both new techniques greatly reduce the computation and learning time, thus extending the applicability of RL. Please also see the related DAIS Outcomes on “RL for Network Control” and “Joint Reinforcement and Transfer Learning for Fragmented SDC.”

Key achievements include the development of:

- sasRL technique to overcome huge state-action spaces

- Jointly trained state-action embedding to speed up learning for RL

Implications for Defence

The new techniques will enable defence to apply RL for real-time control and sharing of infrastructure assets among armed forces. They support efficient, agile and robust configuration and use of resources, which are unmatched by our adversaries. The techniques are also applicable to other systems such as radio spectrum sharing in electromagnetic warfare.

Readiness & alternative Defence uses

TRL 2/3. Both new techniques have been applied to practical problems studied in the literature and the sasRL has been prototyped in Leung, Kin K., et al. “Reinforcement and transfer learning for distributed analytics in fragmented software defined coalitions.” Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications III. Vol. 11746. International Society for Optics and Photonics, 2021. They can be readily applied to defence systems.

Resources and references

- Zhang, Ziyao, et al. “Efficient Reinforcement Learning with Implicit Action Space.” 4th Annual Fall Meeting of the DAIS ITA, 2020

- Pritz, Paul J., Liang Ma, and Kin K. Leung. “Joint State-Action Embedding for Efficient Reinforcement Learning.” arXiv e-prints (2020): arXiv-2010.

- Leung, Kin K., Anand Mudgerikar, Ankush Singla, Elisa Bertino, Dinesh Verma, Kevin Chan, John Melrose, and Jeremy Tucker. “Reinforcement and transfer learning for distributed analytics in fragmented software defined coalitions.” In Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications III, vol. 11746, p. 117461W. International Society for Optics and Photonics, 2021.[

Organisations

Imperial College, IBM US, Yale University, Purdue University, Dstl and ARL