Model Poisoning Attacks and Defences in Federated Learning

Military / Coalition Issue

Federated learning is increasingly being adopted for information sharing and distributed model training among coalition members while addressing their data sensitivity concerns. It allows coalition members to train models locally at the edge and share only insights, in the form of model parameters, with a central server. The server mediates a multi-round training protocol among the members to assimilate all the local information into one global model. This global model, shared among the agents, is then used for critical decision-making. It is thus imperative to analyse and assess the adversarial mechanisms that could introduce vulnerabilities into the global model, as well as ways to mitigate them.
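The multi-round protocol described above can be illustrated with a minimal sketch. It assumes an unweighted mean as the server's aggregation rule and a toy quadratic objective per member; the names `local_update`, `aggregate` and `run_rounds` are hypothetical and do not come from the project's code.

```python
from typing import List

Vector = List[float]


def local_update(global_weights: Vector, local_target: Vector, lr: float = 0.5) -> Vector:
    """One local gradient step on a toy objective 0.5 * ||w - local_target||^2.

    Only the resulting update (delta) leaves the member, never its data
    (here represented by local_target).
    """
    return [-lr * (w - t) for w, t in zip(global_weights, local_target)]


def aggregate(global_weights: Vector, updates: List[Vector]) -> Vector:
    """Server step: add the unweighted mean of the member updates (an assumption)."""
    n = len(updates)
    mean_update = [sum(column) / n for column in zip(*updates)]
    return [w + d for w, d in zip(global_weights, mean_update)]


def run_rounds(targets: List[Vector], rounds: int = 20) -> Vector:
    """Run the multi-round protocol starting from an all-zero global model."""
    weights = [0.0] * len(targets[0])
    for _ in range(rounds):
        updates = [local_update(weights, t) for t in targets]
        weights = aggregate(weights, updates)
    return weights


# Three members with different private optima; the global model settles
# near their average without any member revealing its own target.
member_targets = [[1.0, 0.0], [3.0, 2.0], [2.0, 4.0]]
global_model = run_rounds(member_targets)
print(global_model)
```

Because the server sees only the updates, a member that sends a manipulated update can influence the global model directly, which is the exposure the rest of this brief examines.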

Core idea and key achievements

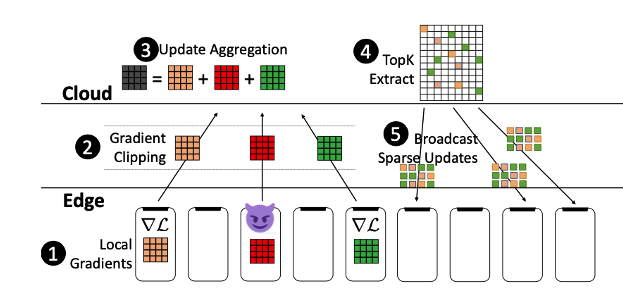

There are two key achievements. First, we proposed a model-poisoning attack that introduces a targeted backdoor into the global model trained using federated learning. We demonstrated that an adversary can manipulate the model weights to cause targeted misclassification by the global model while maintaining attack stealth. This class of attacks differs from the more traditional Byzantine attacks considered in prior work. We then evaluated the efficacy of the attack in a peer-to-peer (p2p) setting. Second, we proposed a provable defence that combines top-k sparsification with gradient clipping to bound the adversarial impact on the global model. We performed a large-scale evaluation of the effectiveness of both the attack (under colluding attackers) and the defence.
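The interplay between a poisoned update and the two defence ingredients named above can be sketched as follows. This is an illustrative toy, not the evaluated implementation: the scaled ("boosted") malicious update, the ordering (clip each member update, then keep the top-k coordinates of the mean), and the names `boost`, `clip_l2`, `top_k_sparsify` and `defended_aggregate` are all assumptions made for this example.

```python
import math
from typing import List

Vector = List[float]


def l2_norm(v: Vector) -> float:
    return math.sqrt(sum(x * x for x in v))


def boost(update: Vector, factor: float) -> Vector:
    """Adversary scales its update so it survives averaging (assumed attack model)."""
    return [factor * x for x in update]


def clip_l2(update: Vector, max_norm: float) -> Vector:
    """Rescale an update so that its L2 norm is at most max_norm."""
    norm = l2_norm(update)
    if norm <= max_norm:
        return list(update)
    return [x * (max_norm / norm) for x in update]


def top_k_sparsify(update: Vector, k: int) -> Vector:
    """Keep the k largest-magnitude coordinates and zero out the rest."""
    order = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    keep = set(order[:k])
    return [x if i in keep else 0.0 for i, x in enumerate(update)]


def mean(updates: List[Vector]) -> Vector:
    n = len(updates)
    return [sum(column) / n for column in zip(*updates)]


def defended_aggregate(updates: List[Vector], max_norm: float, k: int) -> Vector:
    """Clip every member update, average, then sparsify the aggregate."""
    clipped = [clip_l2(u, max_norm) for u in updates]
    return top_k_sparsify(mean(clipped), k)


benign = [[0.10, -0.20, 0.05, 0.00], [0.12, -0.18, 0.02, 0.01]]
malicious = boost([0.0, 0.0, 1.0, 1.0], factor=3.0)
all_updates = benign + [malicious]

undefended = mean(all_updates)
defended = defended_aggregate(all_updates, max_norm=0.5, k=2)
print("undefended norm:", l2_norm(undefended))
print("defended norm:  ", l2_norm(defended))
```

In this sketch, clipping caps the norm any single member can contribute, and sparsification limits how many coordinates of the global model change per round, so the aggregate's norm never exceeds `max_norm`. The values `max_norm=0.5` and `k=2` are arbitrary choices for the toy data.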

Implications for Defence

Understanding the risks of deploying federated learning allows decision makers to remain vigilant against possible attacks. The proposed defence mechanism, together with secure aggregation techniques, could introduce resilience and reduce the risk of deployment.

Readiness & alternative Defence uses

Attack feasibility and demonstration of defence efficiency, level 3.

Resources and references

- Bhagoji, Arjun Nitin, et al. “Analyzing federated learning through an adversarial lens.” International Conference on Machine Learning. PMLR, 2019.

- Tomsett, Richard, Kevin Chan, and Supriyo Chakraborty. “Model poisoning attacks against distributed machine learning systems.” Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications. Vol. 11006. International Society for Optics and Photonics, 2019.

- “Provably Defending Against Backdoor Attacks in Federated Learning with Sparsification” (under submission)

- https://github.com/inspire-group/ModelPoisoning

Organisations

IBM US, IBM UK, ARL, Princeton