Inconsistency in Explanation Metrics

Military / Coalition Issue

Deep learning models have been shown to perform extremely well in a variety of domains and are being increasingly used for critical decision-making. These models operate as black-boxes and rely on multiplicity of layers to extract abstract (often un-interpretable) features that are specifically tailored towards improving performance on the task. For efficient human-machine hybrid coalition networks, where the human decision maker can trust the model output, it is therefore imperative to augment model output with ``explanations’’ that introduce transparency to the model decision-making. Given this important role of explanations in establishing trust, several metrics have been proposed to assess their fidelity and faithfulness to the model decision. These metrics are important to determine the suitability of an explanation mechanism based on end-user requirement.

Core idea and key achievements

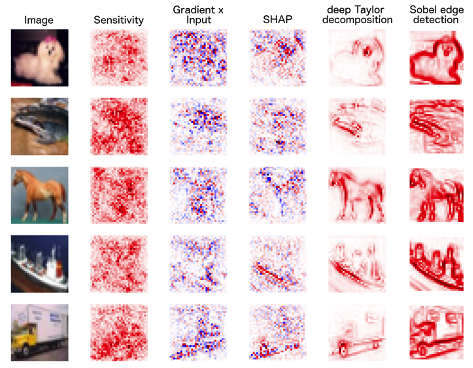

Saliency maps are used for providing post-hoc explanations. These maps are typically projections on the input domain quantifying the relevance of the input features towards the model decision. For example, in the context of images and videos, saliency maps indicate the relevance of each pixel towards the model output. Metrics have been proposed to assess the quality of saliency maps. We proposed a set of reliability measures, adopted from psychometric testing, to quantify the consistency of these saliency metrics. Using several tests, we demonstrated that (i) different metrics did not produce consistent ranking on the same sample and model, (ii) global saliency metrics, computed over all test samples also had high variance. Our results highlight that current metrics are highly unreliable and call on the community to develop better saliency metrics.

Implications for Defence

While explanations are being mandated as part of several regulatory policies (e.g., GDPR), their reliability and consistency also plays a key role in bootstrapping trust between human and machines.

Readiness & alternative Defence uses

Conceptual understanding demonstrated via initial experiments, level 1.

Resources and references

- Tomsett, Richard, et al. “Sanity checks for saliency metrics.” Proceedings of the AAAI conference on artificial intelligence. Vol. 34. No. 04. 2020.

Organisations

IBM UK, IBM US, Cardiff, ARL