Uncertainty-Aware Artificial Intelligence and Machine Learning

Military / Coalition Issue

Military coalitions must operate in dynamic and contested environments where constrained sharing policies limit the data available to adapt AI systems. The resulting high epistemic uncertainty can cause AI to make poor recommendations, potentially leading to disastrous decision-making.

Core idea and key achievements

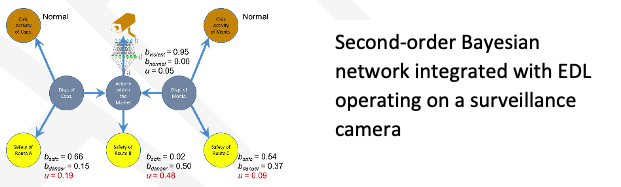

Developed methods to extract the epistemic uncertainty inherent in neuro-symbolic AI & ML systems trained with limited data. At the symbolic layer, exact inference algorithms have been modified to propagate second-order probabilities, so that queries can be answered with confidence bounds.
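To illustrate what a second-order probability with confidence bounds can look like, the following minimal sketch represents an uncertain probability as a Beta distribution built from observation counts. It is not the modified inference algorithm itself: the function name `beta_opinion`, the uniform Beta(1, 1) prior, and the normal-approximation interval are all assumptions made for illustration.

```python
import math


def beta_opinion(successes, failures, z=1.96):
    """Summarise an uncertain probability as a Beta distribution.

    Assumption: a uniform Beta(1, 1) prior, so the posterior is
    Beta(successes + 1, failures + 1). The bounds are a rough normal
    approximation (mean +/- z standard deviations), clipped to [0, 1].
    """
    alpha = successes + 1.0
    beta = failures + 1.0
    total = alpha + beta
    mean = alpha / total
    variance = alpha * beta / (total ** 2 * (total + 1.0))
    half_width = z * math.sqrt(variance)
    lower = max(0.0, mean - half_width)
    upper = min(1.0, mean + half_width)
    return mean, lower, upper


# Same observed ratio (3:1), very different amounts of data.
few = beta_opinion(3, 1)
many = beta_opinion(300, 100)

for label, (mean, lower, upper) in (("few", few), ("many", many)):
    print(f"{label}: mean={mean:.3f}, bounds=[{lower:.3f}, {upper:.3f}]")
```

With only four observations the bounds are wide, while four hundred observations at the same ratio give a much narrower interval. This is the kind of difference a point probability alone would hide.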

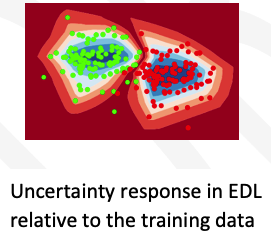

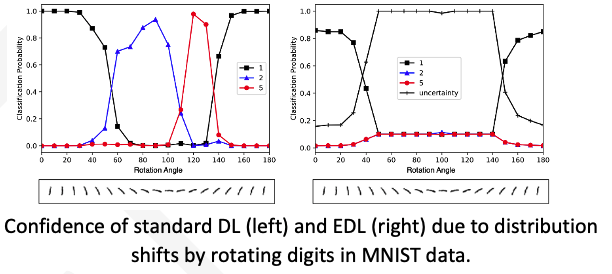

At the neural layer, evidential deep learning (EDL) networks are trained to characterize the amount of relevant evidence for each alternative, given the input (sensor) data and the data used to train the network. Across many different applications, EDL has been shown to detect out-of-distribution test samples. Furthermore, accuracy increases when decisions on highly uncertain test data are deferred.
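The sketch below shows how per-class evidence can be turned into an uncertainty estimate and a defer decision, following the Dirichlet formulation in Sensoy et al. (2018): with K classes and non-negative evidence e_k, the Dirichlet parameters are e_k + 1, their sum is S, the belief in class k is e_k / S, and the uncertainty is K / S. The evidence vectors, the helper names `edl_opinion` and `decide`, and the 0.5 threshold are hypothetical values chosen for illustration, and no network training is shown.

```python
def edl_opinion(evidence):
    """Map non-negative per-class evidence to beliefs and uncertainty."""
    num_classes = len(evidence)
    alpha = [e + 1.0 for e in evidence]        # Dirichlet parameters
    strength = sum(alpha)                      # Dirichlet strength S
    belief = [e / strength for e in evidence]  # belief mass per class
    uncertainty = num_classes / strength       # mass not assigned to any class
    expected_prob = [a / strength for a in alpha]
    return belief, uncertainty, expected_prob


def decide(evidence, max_uncertainty=0.5):
    """Return (class index, uncertainty), or (None, uncertainty) to defer.

    max_uncertainty is an illustrative threshold, not a recommended value.
    """
    _, uncertainty, expected_prob = edl_opinion(evidence)
    if uncertainty > max_uncertainty:
        return None, uncertainty
    best = max(range(len(expected_prob)), key=expected_prob.__getitem__)
    return best, uncertainty


# Hypothetical network outputs for a three-class problem.
familiar_input = [40.0, 1.0, 1.0]   # strong evidence for class 0
unfamiliar_input = [0.2, 0.1, 0.3]  # almost no evidence for any class

print(decide(familiar_input))
print(decide(unfamiliar_input))
```

An input that produces little evidence for every class yields an uncertainty close to 1 and is deferred, which is how out-of-distribution samples can be flagged rather than silently classified.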

Implications for Defence

The EDL framework can be applied to numerous target classification systems. It allows such a system to alert decision makers when it is no longer able to provide reliable recommendations, possibly because the operational environment has changed relative to the conditions under which the system was trained.

This enables decision makers to rely on the system only when it is reliable.

Readiness & alternative Defence uses

Provides a framework for training deep learning systems to be uncertainty-aware. Presented at UK Defence’s AI Fest 3, and the concepts have been embraced by the AI research & development community. Initial methods have been tested on simulated and academic data sets. Further work is needed to develop relevant military data sets for evaluating and advancing the training framework and inference methods that enable uncertainty-aware AI & ML.

Resources and references

- More details can be found on the Evidential Learning and Reasoning (ELR) asset page

- Cerutti, Federico, et al. “Probabilistic logic programming with beta-distributed random variables.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 33. No. 01. 2019.

- Sensoy, Murat, Lance Kaplan, and Melih Kandemir. “Evidential deep learning to quantify classification uncertainty.” arXiv preprint arXiv:1806.01768 (2018).

- Sensoy, Murat, et al. “Uncertainty-aware deep classifiers using generative models.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 34. No. 04. 2020.

- EDL GitHub repository

- SLProbLog GitHub repository

Organisations

Cardiff University, ARL