Achieving Rapid Trust of Adaptable Artificial Intelligence Systems

Military / Coalition Issue

Military operations typically involve working with partners to resolve rapidly evolving situations in which adversaries are adapting their tactics, techniques and procedures, and the behaviour of the civilian population is changing. Military AI systems will therefore need to learn as operations proceed, and users will be exposed to their partners’ AI systems. Users must consequently be able to understand the capabilities and limitations of such AI systems rapidly when making high-stakes decisions.

Core idea and key achievements

Appropriate trust can be reached rapidly by designing AI systems to be:

- interpretable, revealing to the user what the system knows;

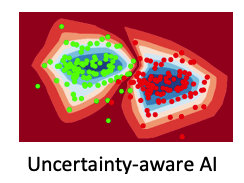

- uncertainty-aware, revealing to the user what the system does not know.

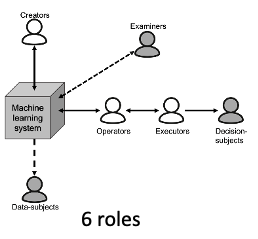
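One common way to make a classifier uncertainty-aware is to run a small ensemble of models and report the entropy of their averaged class probabilities alongside the prediction, so that inputs on which the members disagree are flagged rather than silently answered. The sketch below assumes that technique; the source does not say which uncertainty method was used, and the function names, example probabilities and 0.5-nat threshold are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def mean_probs(member_probs: Sequence[Sequence[float]]) -> List[float]:
    """Average the class-probability vectors produced by each ensemble member."""
    n = len(member_probs)
    k = len(member_probs[0])
    return [sum(p[c] for p in member_probs) / n for c in range(k)]


def predictive_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a probability vector; 0 means fully certain."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def predict_with_uncertainty(
    member_probs: Sequence[Sequence[float]], threshold: float = 0.5
) -> Tuple[int, float, bool]:
    """Return (predicted class, entropy, flag_for_review).

    `threshold` is a hypothetical tuning parameter, not a value from the source.
    """
    probs = mean_probs(member_probs)
    label = max(range(len(probs)), key=probs.__getitem__)
    h = predictive_entropy(probs)
    return label, h, h > threshold


# Hypothetical outputs of a three-member ensemble over three classes.
agree = [[0.95, 0.03, 0.02], [0.93, 0.04, 0.03], [0.94, 0.03, 0.03]]
disagree = [[0.6, 0.3, 0.1], [0.3, 0.6, 0.1], [0.4, 0.2, 0.4]]

print(predict_with_uncertainty(agree))     # members agree: low entropy, not flagged
print(predict_with_uncertainty(disagree))  # members disagree: high entropy, flagged
```

Returning the flag together with the prediction lets the interface show the user both what the system concluded and how far that conclusion should be relied upon.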

The interpretability of AI systems is a well-recognised problem; this research has highlighted the importance of uncertainty awareness (particularly for military operations) and how the two properties interact. Trust calibration must also be appropriate to the role of the user: the research enumerated six roles covering the spectrum of use cases found in the literature on interpretable AI, and provided six scenarios demonstrating how these roles interact with an AI system, including how each role influences the interpretability required.

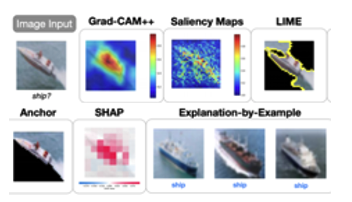

The research also examined end-user explanation preferences and found that explanation-by-example was preferred for image, audio and sensory data, whereas LIME (Local Interpretable Model-agnostic Explanations) was preferred for text classification.
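Explanation-by-example justifies a prediction by showing the user the training examples most similar to the input. A minimal sketch follows, assuming feature vectors have already been extracted and using Euclidean distance in that feature space; the identifiers, labels, data and the `explain_by_example` name are hypothetical, and the explanation methods studied in the research may measure similarity differently.

```python
import math
from typing import List, Sequence, Tuple

# (identifier, feature vector, label) for one training example.
Example = Tuple[str, Sequence[float], str]


def explain_by_example(
    query: Sequence[float], training: List[Example], k: int = 2
) -> List[Tuple[str, str, float]]:
    """Return the k training examples closest to `query` as (id, label, distance)."""
    scored = [
        (ex_id, label, math.dist(query, features))
        for ex_id, features, label in training
    ]
    scored.sort(key=lambda item: item[2])  # nearest first
    return scored[:k]


# Hypothetical two-dimensional feature vectors for a toy training set.
training_set: List[Example] = [
    ("img_001", [0.9, 0.1], "vehicle"),
    ("img_002", [0.8, 0.2], "vehicle"),
    ("img_003", [0.1, 0.9], "person"),
    ("img_004", [0.2, 0.7], "person"),
]

for ex_id, label, dist in explain_by_example([0.88, 0.12], training_set):
    print(f"{ex_id} ({label}) at distance {dist:.3f}")
```

Showing the retrieved examples and their labels lets the user check the prediction against cases they can inspect directly, which requires no familiarity with the model's internals.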

Implications for Defence

These techniques will allow users to quickly build a sufficiently accurate mental model of an AI system, so that they understand when, and when not, to trust its outputs. This supports the safe use of AI by formalising the design, implementation and testing of trust in AI systems. It also enables Defence to adopt AI systems that learn and adapt at the ‘pace of the fight’, which is necessary to ensure that users’ tempo of understanding and action is not over-matched by that of their adversaries.

Readiness & alternative Defence uses

Provides high-level design requirements and a framework for the design, implementation and testing of military AI systems. Presented at UK Defence’s AI Fest 3, with the concepts embraced by the AI research and development community. The entries on ‘Uncertainty-aware AI’ and ‘Adapting AI systems to recognise new patterns of distributed activity’ provide further details.

Resources and references

- Rapid Trust Calibration through Interpretable and Uncertainty-Aware AI

- Interpretable to whom? A Role-based Model for Analyzing Interpretable Machine Learning Systems

- How Can I Explain This To You? An Empirical Study of Deep Neural Network Explanation Methods (NeurIPS, 2020)

Organisations

IBM UK, IBM US, Cardiff University, UCLA, Dstl, ARL