Real-Time Explainable Artificial Intelligence: Time-Series and Multi-Modal Data

Military / Coalition Issue

As artificial intelligence (AI) based assets are increasingly employed in operating environments, operators who make decisions based on the output of such assets need to be able to “calibrate their trust” in them (see also Achieving Rapid Trust of Adaptable Artificial Intelligence Systems). Explainable AI (XAI) is a key part of trust calibration. Commonly, XAI is seen as a matter of being able to ask an AI system “Why?” in response to an output. In real-time settings, such as monitoring live sensor feeds, it can be more convenient to see live explanations, at least while an operator is becoming familiar with a new asset.

Core Idea and Key Achievements

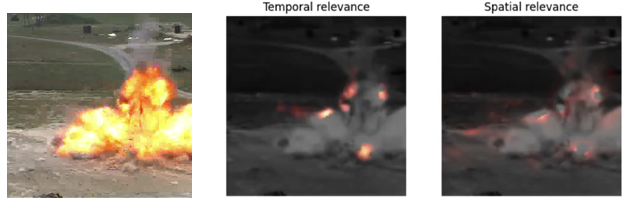

The Selective Relevance XAI technique operates in real time on an edge processor and highlights changes in continuous time-series data, for example between frames of a video or in an audio stream. It can also operate on multiple modalities simultaneously, filtering the most relevant and fast-changing features in a set of multimodal sensor feeds. In the accompanying images of an explosion detected by a 3D Convolutional Neural Network, Selective Relevance highlights the origin of the blast (centre, bottom) and parts of the leading edge of the debris cloud as the most relevant temporal (motion) features of the detected event; other elements of the debris are highlighted as being of spatial relevance (right).
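The split between temporal and spatial relevance can be illustrated with a minimal sketch. It is not the torchexplain implementation: it assumes per-pixel relevance scores have already been produced by some attribution method, and it uses an assumed rule in which a pixel counts as temporally relevant when its frame-to-frame change in relevance exceeds `k` standard deviations of all changes in the clip. The function name `split_relevance` and the parameter `k` are hypothetical.

```python
from statistics import pstdev


def split_relevance(relevance, k=1.0):
    """Split per-frame relevance into temporal and spatial parts.

    relevance: list of frames, each a flat list of per-pixel relevance
    scores (assumed to come from an attribution method run beforehand).
    k: hypothetical sensitivity factor applied to the standard deviation.
    """
    n_pixels = len(relevance[0])
    # Absolute frame-to-frame change in relevance; the first frame has no
    # predecessor, so its change is defined as zero.
    change = [[0.0] * n_pixels]
    for prev, curr in zip(relevance, relevance[1:]):
        change.append([abs(c - p) for p, c in zip(prev, curr)])

    # Assumed rule: a pixel is "temporal" when its change exceeds k standard
    # deviations of all changes in the clip.
    threshold = k * pstdev([c for frame in change for c in frame])

    temporal, spatial = [], []
    for rel_frame, chg_frame in zip(relevance, change):
        temporal.append(
            [r if c > threshold else 0.0 for r, c in zip(rel_frame, chg_frame)]
        )
        spatial.append(
            [0.0 if c > threshold else r for r, c in zip(rel_frame, chg_frame)]
        )
    return temporal, spatial


# Toy clip: 3 frames of 4 pixels. Pixels 0 and 1 are steadily relevant
# (static content); pixels 2 and 3 light up briefly (motion).
clip = [
    [0.5, 0.1, 0.0, 0.0],
    [0.5, 0.1, 0.9, 0.0],
    [0.5, 0.1, 0.0, 0.8],
]
temporal, spatial = split_relevance(clip)
```

In this toy clip the briefly active pixels end up in `temporal` and the steadily relevant ones in `spatial`, which mirrors the distinction between the blast's leading edge and the static parts of the scene. Every relevance score lands in exactly one of the two maps, so they can be overlaid in different colours.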

Implications for Defence

By highlighting temporal and multimodal features, Selective Relevance allows an operator to receive explanations that focus on the most relevant features and modalities, i.e., highly tailored and compact explanations. These reveal what the AI is (and is not) paying attention to in a scene, across multiple senses (vision, audio, and others). The end goal is improved trust, and hence robustness, of the human-machine system. Paying attention to the most relevant temporal features in multiple modalities also guards against adversarial attacks via spoof input: an attacker would need to spoof the input in multiple modalities in a way that generated both false conclusions and plausible explanations for those false conclusions.
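One way to picture a compact, modality-focused explanation is to rank modalities by their share of the total relevance and keep only those above a cut-off. The sketch below is an illustration under stated assumptions, not the SAVR method itself: the relevance scores are assumed to be comparable across modalities, and the function names, the `min_share` parameter, and the example modality labels are all hypothetical.

```python
def modality_shares(relevance_by_modality):
    """Return each modality's fraction of the total absolute relevance."""
    totals = {
        modality: sum(abs(score) for score in scores)
        for modality, scores in relevance_by_modality.items()
    }
    grand_total = sum(totals.values())
    if grand_total == 0:
        # No relevance anywhere: every modality gets a zero share.
        return {modality: 0.0 for modality in totals}
    return {modality: total / grand_total for modality, total in totals.items()}


def compact_explanation(relevance_by_modality, min_share=0.1):
    """Keep modalities whose share is at least min_share, highest first."""
    shares = modality_shares(relevance_by_modality)
    kept = [(m, s) for m, s in shares.items() if s >= min_share]
    return sorted(kept, key=lambda item: item[1], reverse=True)


# Hypothetical temporal relevance scores for one detected event.
event = {
    "video": [0.5, 0.25],
    "audio": [0.1875],
    "rf": [0.0625],
}
summary = compact_explanation(event)
```

Here `summary` keeps the video and audio feeds and drops the low-share `rf` feed, so the operator sees only the modalities that contributed most to the detection.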

Readiness and Alternative Defence Uses

This work is at technology readiness level (TRL) 3/4. Selective Relevance is available as open-source software integrated into the PyTorch framework via our torchexplain package. The approach has been tested with multiple deep neural network video processing architectures, including C3D, MARS, MERS and SlowFast. In addition to the default audio-video deployment (SAVR: Selective Audio Visual Relevance), Selective Relevance is applicable to other time-series data (e.g. electromagnetic spectra and cyber traffic).

Resources and References

- Hiley, Liam, et al. “Discriminating spatial and temporal relevance in deep Taylor decompositions for explainable activity recognition.” arXiv preprint arXiv:1908.01536 (2019).

- Taylor, Harrison, et al. “VADR: Discriminative multimodal explanations for situational understanding.” 2020 IEEE 23rd International Conference on Information Fusion (FUSION). IEEE, 2020.

- Hiley, Liam, et al. “SAVR: Selective Audio Visual Relevance for Explainable Coalition Situational Understanding (demo).” DAIS Annual Fall Meeting, 2020.

Organisations

Cardiff University, IBM US, IBM UK, ARL