Coresets via Multi-pronged Data Reduction

- Technical achievement: Watch the video

- Demonstration: Watch the video

Military / Coalition Issue

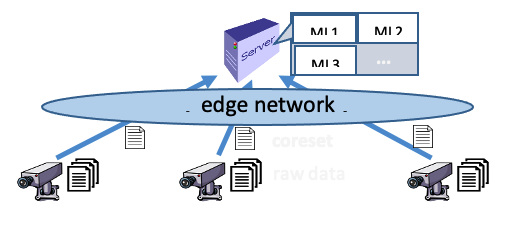

Tactical military operations often occur in poorly connected or even adversarial networking environments, where it is difficult to apply advanced ML (e.g., deep learning) to real-time data because (i) bringing the data to the infrastructure consumes too much time and bandwidth, while (ii) running ML on the edge devices that collect the data (e.g., mobile devices, IoT devices) is too slow and too memory- and power-intensive. It is thus highly desirable to have intelligent yet lightweight ways to collect small data summaries that are informative enough to train ML models approximating the models trained on the full dataset.

Core idea and key achievements

Developing efficient and effective data summarization algorithms that:

- significantly reduce the data size and thus the communication cost in data collection

- provably approximate the full dataset in terms of quality of models trained on the reduced data

Our approach is to use a coreset: a small weighted dataset in the same feature space as the full dataset, such that a model trained on the coreset approximates a model trained on the full dataset with a controllable approximation error.
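To make the notion of a weighted summary concrete, the following is a minimal sketch of one simple construction, assumed here purely for illustration: cluster the data with k-means and keep each cluster center, weighted by the number of points it represents. The function names (`kmeans_coreset`, `weighted_cost`) and all parameters are hypothetical and are not taken from the project's code; the constructions in the referenced papers may differ in detail.

```python
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two points given as sequences."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans_coreset(points, k, n_iter=20, seed=0):
    """Summarize `points` (a list of equal-length lists) as at most k
    weighted points: k-means centers weighted by cluster size.

    Illustrative sketch only; requires 1 <= k <= len(points), n_iter >= 1.
    """
    rng = random.Random(seed)
    # Initialize centers with k distinct data points.
    centers = [list(p) for p in rng.sample(points, k)]
    for _ in range(n_iter):
        # Assignment step: index of the nearest center for every point.
        labels = [min(range(k), key=lambda j: sq_dist(p, centers[j]))
                  for p in points]
        # Update step: move each non-empty cluster's center to its mean.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    # Weight of a center = number of points assigned to it.
    weights = [labels.count(j) for j in range(k)]
    return [(centers[j], weights[j]) for j in range(k) if weights[j] > 0]


def weighted_cost(weighted_points, query_centers):
    """k-means cost of `query_centers` evaluated on a weighted point set."""
    return sum(w * min(sq_dist(p, c) for c in query_centers)
               for p, w in weighted_points)


if __name__ == "__main__":
    rng = random.Random(1)
    data = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(200)]
    coreset = kmeans_coreset(data, k=8)
    print(len(coreset), "weighted points summarize", len(data), "samples")
    print("total weight:", sum(w for _, w in coreset))
```

Because each kept center is the mean of the points assigned to it, the weights sum to the size of the full dataset and the weighted mean of the summary equals the mean of the full data. A downstream model can then be trained on the weighted points, for instance by comparing `weighted_cost` on the summary against the same cost on the full data with unit weights.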

We investigate coreset-based ML in three aspects:

- robust coreset supporting multiple ML tasks,

- joint coreset + quantization + dimensionality reduction for better communication efficiency,

- efficacy in preserving privacy in comparison to federated learning.

These three directions progressively advance the state of the art.
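As a rough illustration of the second direction above, the sketch below shows how dimensionality reduction and quantization can shrink the payload of a point set that has already been reduced in cardinality. It assumes a Gaussian random projection and a uniform scalar quantizer as stand-ins; these choices, the function names, and the example sizes are hypothetical and are not claimed to match the algorithms in the referenced papers.

```python
import math
import random


def random_projection(points, out_dim, seed=0):
    """Project points to `out_dim` dimensions with a Gaussian random
    matrix scaled by 1/sqrt(out_dim) (an assumed stand-in for the
    dimensionality reduction step)."""
    rng = random.Random(seed)
    in_dim = len(points[0])
    scale = 1.0 / math.sqrt(out_dim)
    matrix = [[rng.gauss(0, 1) * scale for _ in range(in_dim)]
              for _ in range(out_dim)]
    return [[sum(r * x for r, x in zip(row, p)) for row in matrix]
            for p in points]


def quantize(points, bits):
    """Uniform scalar quantization of every coordinate to `bits` bits.
    Returns integer codes plus the offset and step needed to decode."""
    lo = min(min(p) for p in points)
    hi = max(max(p) for p in points)
    levels = 2 ** bits - 1
    step = (hi - lo) / levels if hi > lo else 1.0
    codes = [[round((x - lo) / step) for x in p] for p in points]
    return codes, lo, step


def dequantize(codes, lo, step):
    """Map integer codes back to approximate coordinate values."""
    return [[lo + c * step for c in row] for row in codes]


def payload_bits(n_points, dim, bits_per_value):
    """Bits needed to send the coordinates (weights and the two
    decoding constants are ignored for simplicity)."""
    return n_points * dim * bits_per_value


if __name__ == "__main__":
    rng = random.Random(2)
    # Hypothetical sizes: a 100-point summary in 64 dimensions.
    summary = [[rng.gauss(0, 1) for _ in range(64)] for _ in range(100)]
    reduced = random_projection(summary, out_dim=8)
    codes, lo, step = quantize(reduced, bits=8)
    approx = dequantize(codes, lo, step)
    err = max(abs(a - b) for pa, pb in zip(reduced, approx)
              for a, b in zip(pa, pb))
    print("max quantization error:", err, "(bounded by step/2 =", step / 2, ")")
    print("bits before:", payload_bits(100, 64, 64))
    print("bits after: ", payload_bits(100, 8, 8))
```

The three reductions compound: the coreset lowers the number of points, the projection lowers the number of coordinates per point, and the quantizer lowers the number of bits per coordinate. Each one introduces its own approximation error, so in practice the budget has to be split among them.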

Implications for Defence

In contrast to heuristic data reduction techniques such as random sampling, our coreset-based data summarization techniques extract the most informative data points and thus significantly reduce the communication cost while guaranteeing the quality of the trained models. While coresets have been used to speed up ML in centralized settings, their use for communication reduction in the training of multiple models of interest is our novel contribution. Our robust coreset can greatly benefit real-time information extraction in the field under limited bandwidth. We are also the first to combine coresets with quantization and dimensionality reduction, which has demonstrated great promise in further reducing communication cost without compromising model quality, a property that is highly desirable in tactical environments. Furthermore, our recent results on the privacy-quality tradeoff can be utilized in exchanging information across coalition boundaries.

Readiness & alternative Defence uses

In addition to the algorithms, we developed experimental implementations (code), case studies on real datasets, and a demo based on the virtual world scenario developed by IBM UK.

Resources and references

- Lu, Hanlin, et al. “Robust coreset construction for distributed machine learning.” IEEE Journal on Selected Areas in Communications 38.10 (2020): 2400-2417.

- Lu, Hanlin, et al. “Sharing Models or Coresets: A Study based on Membership Inference Attack.” arXiv preprint arXiv:2007.02977 (2020).

- Lu, Hanlin, et al. “Communication-efficient k-means for edge-based machine learning.” 2020 IEEE 40th International Conference on Distributed Computing Systems (ICDCS). IEEE, 2020.

- Lu, Hanlin, et al. “Joint coreset construction and quantization for distributed machine learning.” 2020 IFIP Networking Conference (Networking). IEEE, 2020.

- Lu, Hanlin, et al. “Robust Coreset Construction for Distributed Machine Learning.” 2019 IEEE Global Communications Conference (GLOBECOM). IEEE, 2019.

Organisations

PSU, IBM US, UCL, ARL